Decoding the Reversal Curse in Large Language Models

Introduction

In the nuanced world of AI, certain peculiarities offer profound insights into our journey towards advanced machine intelligence. One such intriguing aspect is the 'Reversal Curse' observed in Large Language Models (LLMs). Let's delve into this phenomenon, its implications, and what it reveals about the current state of AI.

Reversal Curse: The Intriguing Phenomenon

Imagine an AI model trained to understand "A is B," yet when presented with "B is A," its comprehension falters. This is the essence of the Reversal Curse – a fascinating challenge where LLMs exhibit unexpected limitations in processing inverted statements.

The Reversal Curse shows that these models fail to draw logical inferences from the statements they were trained on, and therefore fail to generalise that knowledge.

Example

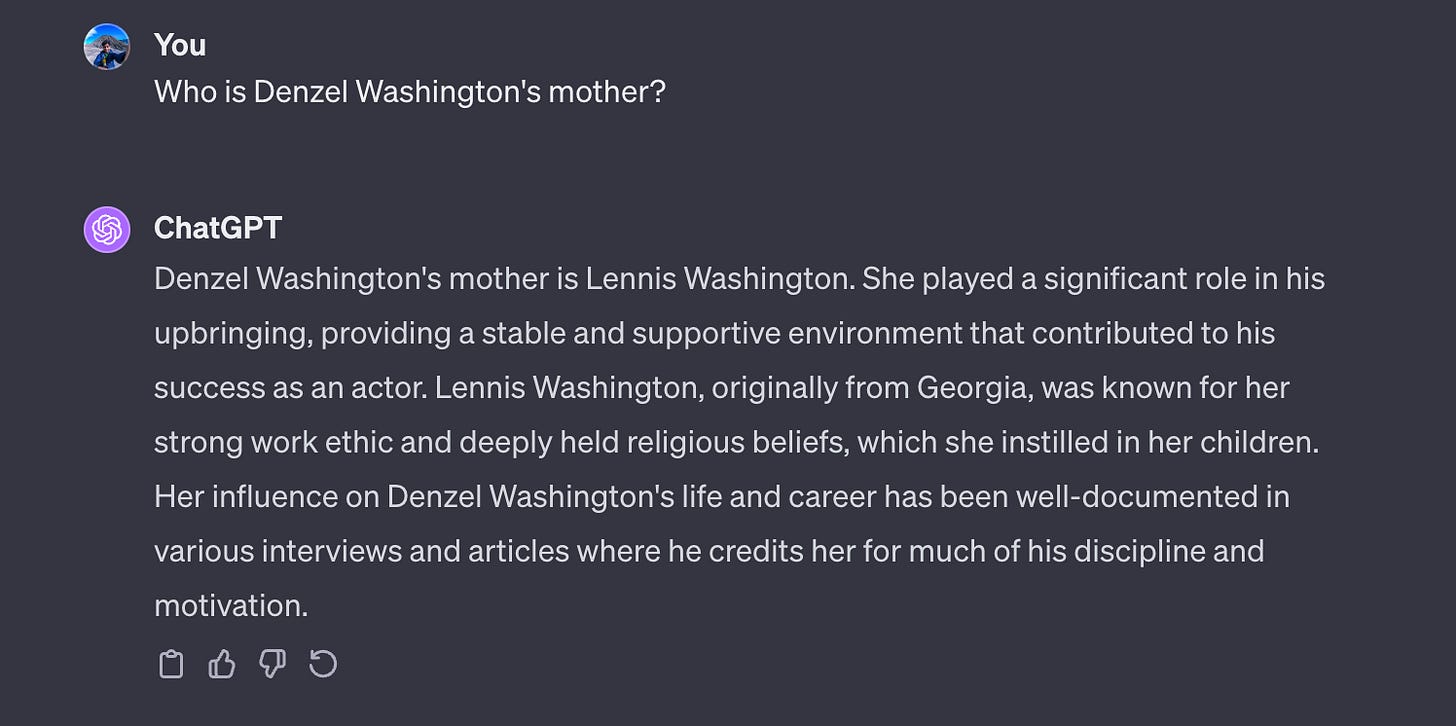

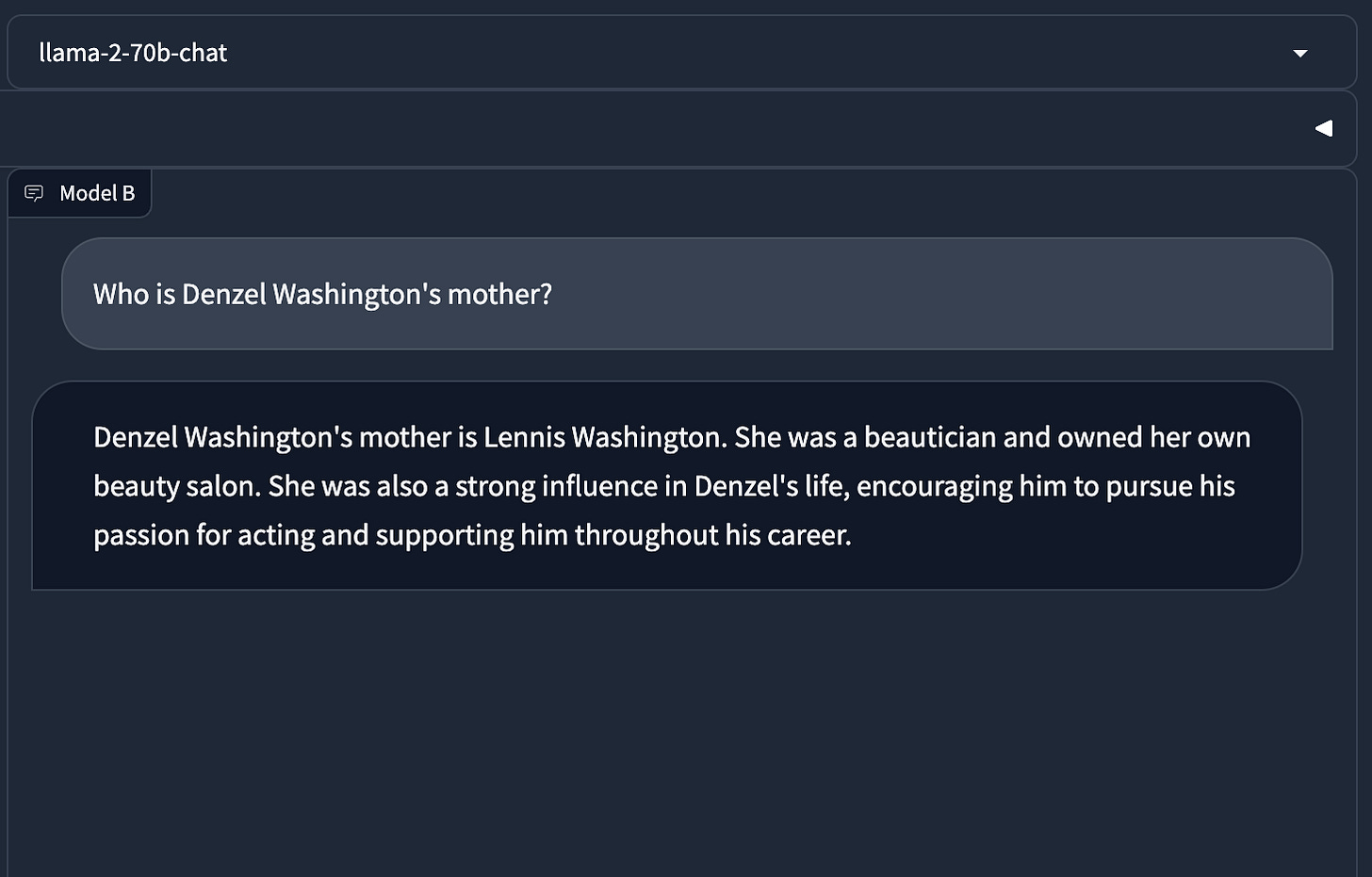

In the case below, all the models correctly answer that Lennis Washington is Denzel Washington’s mother.

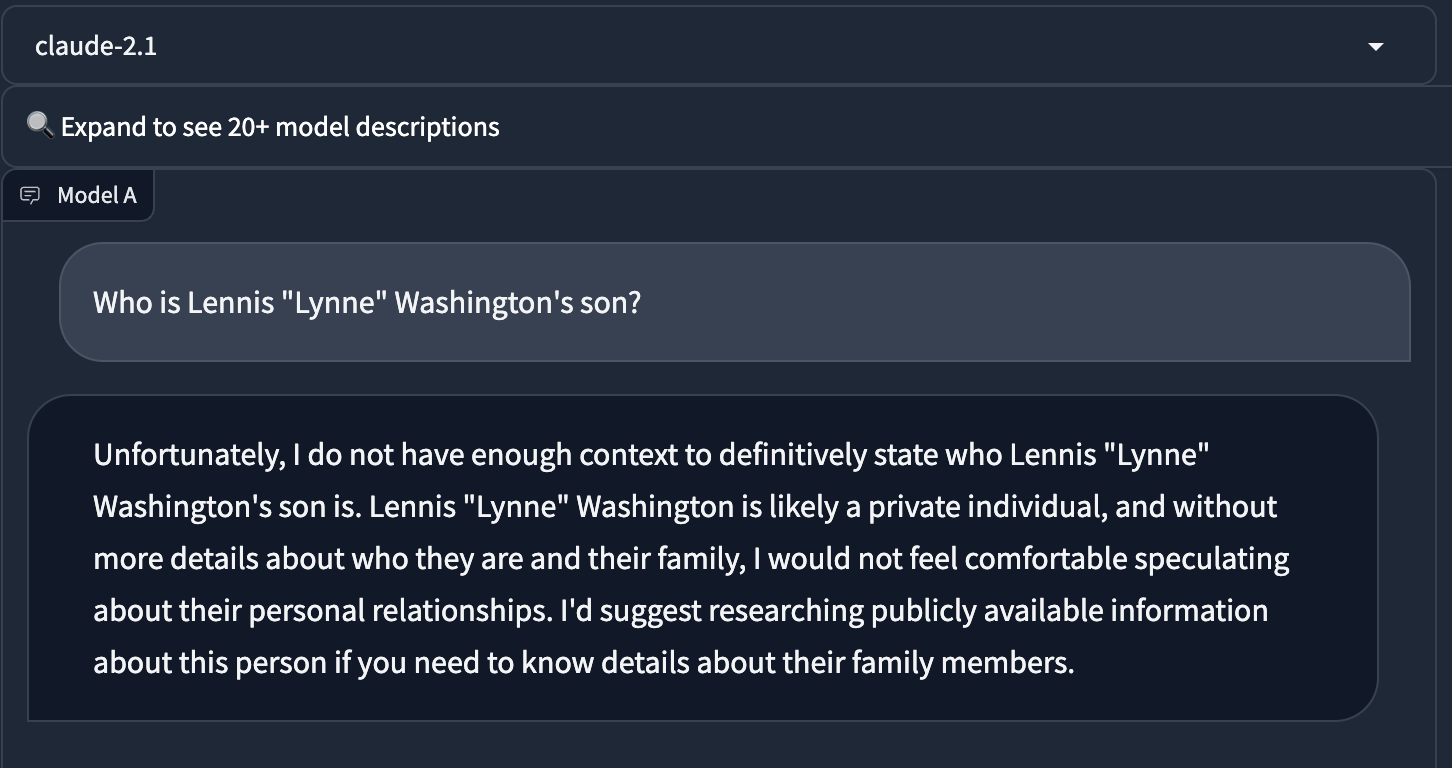

On the other hand, the models fail to recall the reverse relationship.

It's like these smart AIs can't quite handle sentences flipped backward. Odd, right?

Why does this happen?

Recent studies underscore that this is not an anomaly restricted to a single model but a widespread characteristic across various LLMs. This consistent pattern across different architectures and training approaches prompts a deeper examination of our current methodologies in AI language training.

This happens because LLMs are mostly trained on statements of the form A → B but not B → A. Here, the LLMs, which take their training data from the internet, have often seen statements like “Denzel Washington’s mother is Lennis Washington” but rarely “Lennis Washington’s son is Denzel Washington”.

From a technical standpoint, generative models work by predicting the next word from the words that precede it, choosing according to learned probabilities.

For example, whenever the words “Denzel Washington’s mother is” show up, the probability that the next word is “Lennis” could be 98%. On the other hand, whenever the words “Lennis Washington’s son is” show up, the probability that the next word is “Denzel” might be very low, say 0.01% or even zero.
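
To make the asymmetry concrete, here is a minimal sketch of a count-based next-word predictor. It is a toy, not how a real LLM computes probabilities: the three-sentence corpus and the helper names `train` and `next_word_probability` are assumptions made up for illustration. It only shows that a model which has seen the fact in one direction has nothing to draw on for the other.

```python
from collections import Counter, defaultdict

# Toy corpus (hypothetical): the fact only ever appears in the A -> B direction.
corpus = [
    "Denzel Washington's mother is Lennis Washington",
    "Denzel Washington's mother is Lennis Washington",
    "Denzel Washington's mother is Lennis Washington",
]

def train(sentences):
    """Count which word follows each word prefix seen in training."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for i in range(1, len(words)):
            prefix = " ".join(words[:i])
            counts[prefix][words[i]] += 1
    return counts

def next_word_probability(counts, prefix, word):
    """Estimate P(word | prefix) from raw counts; 0.0 for unseen prefixes."""
    followers = counts.get(prefix)
    if not followers:
        return 0.0
    return followers[word] / sum(followers.values())

model = train(corpus)
forward = next_word_probability(model, "Denzel Washington's mother is", "Lennis")
reverse = next_word_probability(model, "Lennis Washington's son is", "Denzel")
print(forward, reverse)  # prints: 1.0 0.0
```

A real LLM generalises far better than raw counts, so the numbers here are exaggerated, but the direction of the gap is the same one the Reversal Curse describes.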

Some hacks to overcome it

Note that these are not comprehensive, one-size-fits-all solutions, but they can act as a patch for the existing problem.

Data Augmentation

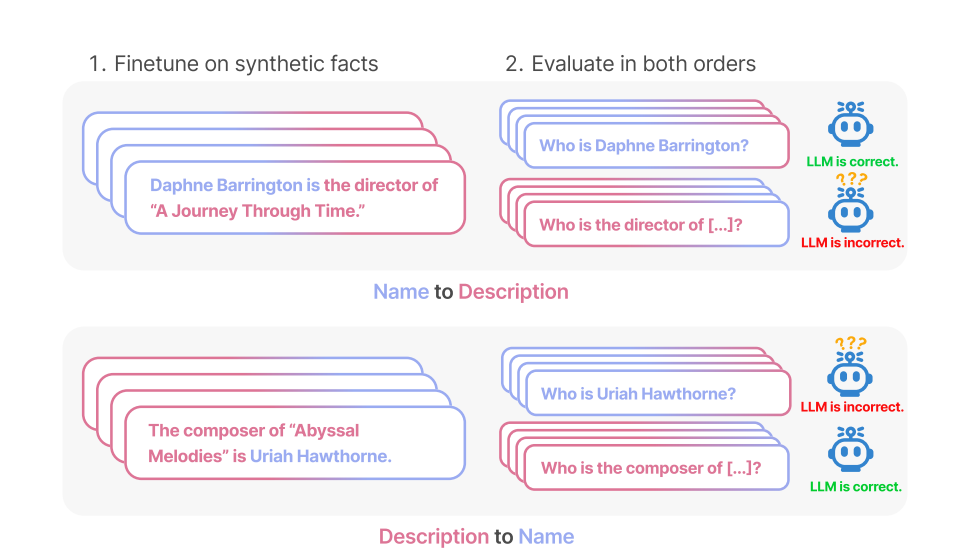

One straightforward way is to train the model on data that also contains the reverse relations. While training on A → B, we can also add B → A. Although this doesn’t solve the fundamental problem (getting the model to make logical inferences and generalise knowledge), it can work for certain cases and applications. However, care must be taken, because not every statement can be reversed: “a dog is an animal” does not imply “an animal is a dog”.
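
One way this augmentation step could look is sketched below. The `TEMPLATES` table, the relation names, and the `augment` function are all hypothetical, and the sketch assumes facts are already available as (A, relation, B) triples. Relations that cannot be safely inverted get no reverse template, so they are emitted in the forward direction only.

```python
# Hypothetical relation table: a forward template and, where the relation can
# be safely inverted, a reverse template. None marks a non-reversible relation.
TEMPLATES = {
    # Assumes the child in every "mother" fact is a son, as in the example.
    "mother": ("{a}'s mother is {b}", "{b}'s son is {a}"),
    # "A dog is a kind of animal" must not become "An animal is a kind of dog".
    "is_a": ("A {a} is a kind of {b}", None),
}

def augment(facts):
    """Return training sentences for each fact, adding the reverse when allowed."""
    sentences = []
    for a, relation, b in facts:
        forward, reverse = TEMPLATES[relation]
        sentences.append(forward.format(a=a, b=b))
        if reverse is not None:
            sentences.append(reverse.format(a=a, b=b))
    return sentences

facts = [
    ("Denzel Washington", "mother", "Lennis Washington"),
    ("dog", "is_a", "animal"),
]
augmented = augment(facts)
for sentence in augmented:
    print(sentence)
```

Keeping the reverse template explicit per relation is a deliberate choice here: someone has to decide, relation by relation, whether the flipped sentence is actually true.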

RAG

For those unfamiliar with RAG, further information can be found here.

The Reversal Curse can be reduced to a certain extent via Retrieval Augmented Generation (RAG). For example, with the capability to access and retrieve real-time information from the internet, the model can respond well.

Alternatively, if you pass the relation A → B as part of the memory buffer, the model can respond well. In the image below the relation is passed manually, but assume a RAG pipeline fetches the information.
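
The idea of fetching the A → B fact and placing it in front of the question can be sketched as follows. This is a toy under stated assumptions: the in-memory `DOCUMENTS` list stands in for a real document store, the word-overlap ranking stands in for a proper retriever (typically embedding-based search), and the call to an actual LLM is left out. The names `tokenize`, `retrieve`, and `build_prompt` are made up for this example.

```python
import re

# Tiny in-memory "knowledge base" standing in for a real document store.
DOCUMENTS = [
    "Denzel Washington's mother is Lennis Washington.",
    "Paris is the capital of France.",
]

def tokenize(text):
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z]+", text.lower()))

def retrieve(question, documents, top_k=1):
    """Rank documents by the number of words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(question, documents):
    """Prepend the retrieved facts so the model can read A -> B in context."""
    context = "\n".join(retrieve(question, documents))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"

prompt = build_prompt("Who is Lennis Washington's son?", DOCUMENTS)
print(prompt)
```

The resulting prompt contains the forward statement next to the reversed question, so the model only has to read the answer off the context rather than recall it from its weights.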

Why does this matter?

Think about it: if an AI can't handle this simple flip, what does it mean for using AI in real life? Chatbots, search engines, you name it – they all need to get things right, forwards and backwards.

For professionals in the field of AI and data science, these insights are crucial. It's a reminder that our AI pals aren't perfect and we need to know their limits when we put them to work. It underscores the necessity for critical assessment and rigorous testing in AI deployments.

Conclusion

While the Reversal Curse highlights a current limitation in LLMs, it's important to acknowledge that AI technology is continually evolving. Future advancements in model architectures, training methodologies, or data processing could potentially mitigate this issue. Moreover, it's worth exploring whether similar challenges occur in other AI domains beyond language models. This ongoing research will not only deepen our understanding of AI capabilities and limitations but also pave the way for more robust and versatile AI applications in the future.

What's your take on this? Drop your thoughts or experiences below

References

The Reversal Curse: LLMs trained on “A is B” fail to learn “B is A”